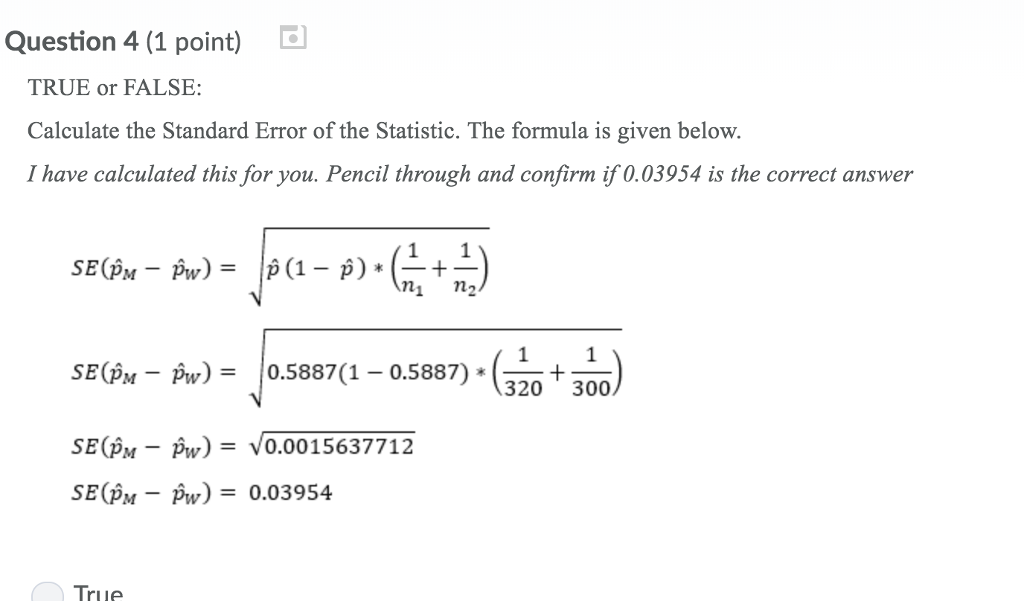

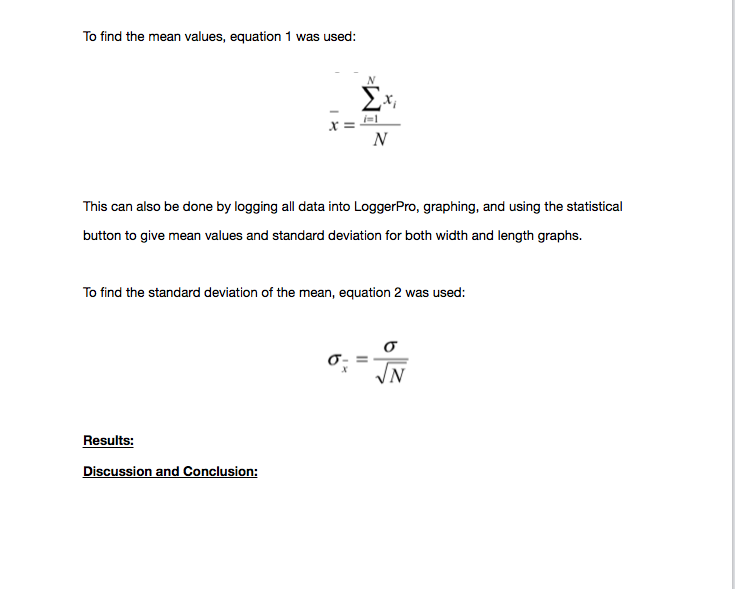

Why is there a ~ symbol in front of some SE values?

If a value is preceded by ~, it means the results are 'ambiguous'. When a fit has converged, changing the value of any single parameter away from its best-fit value moves the curve further from the data and increases the sum-of-squares. But when the fit is ambiguous, changing other parameters can move the curve so it is near the data again. In other words, many combinations of parameter values lead to curves that fit equally well, so the standard error of those parameters is not well defined. When the SE value is preceded by ~, the corresponding confidence intervals are shown as "very wide" with no numerical range (the range would be infinitely wide).

Why doesn't Prism display SE values for my fit?

Starting with Prism 8.2, Prism will only compute and display the SE of parameters if you ask Prism (in the Confidence tab) to report symmetrical confidence intervals. These intervals are computed from the SE values, so it makes sense to display them. We recommend reporting asymmetrical profile likelihood intervals, which are more useful. These are not computed from the SE values, so it makes no sense to report both the SE and the asymmetrical confidence interval.

Prism (and most programs) calls the uncertainty of a calculated value a standard error, but some other programs call it a standard deviation. The standard error of a parameter estimates the standard deviation you would expect to see in that parameter's value if you repeated the experiment many times.

Although a confidence interval can be set at any level (70%, 92%, etc.), three levels are commonly used:

| Confidence level | Confidence interval (mean ± sampling error) |
|---|---|
| 68% | mean ± 1.0 × SE |
| 95% | mean ± 1.96 × SE |
| 99% | mean ± 2.58 × SE |

For example, if a 95% confidence interval for a mean time came out as 32.5 to 41.5 minutes, we would say that we are 95% confident that the true population mean (µ) lies between 32.5 and 41.5 minutes.
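The following minimal Python sketch computes symmetrical intervals as estimate ± multiplier × SE. It assumes a simple sample mean whose SE is the SEM, which is the sample SD divided by √n. It uses `statistics.NormalDist` to get the two-sided normal multiplier for each level. That reproduces the 1.96 listed for 95% and gives about 2.576 for 99% and about 0.994 for 68%; the round 1.0 actually corresponds to roughly 68.3%. The data values are hypothetical. A curve-fitting program such as Prism may use a different multiplier, for example one based on the t distribution. Treat this as an illustration of the idea, not as Prism's exact calculation.

```python
import math
from statistics import NormalDist, mean, stdev


def sem(values):
    """Standard error of the mean: sample SD divided by sqrt(n)."""
    return stdev(values) / math.sqrt(len(values))


def symmetric_ci(estimate, se, level=0.95):
    """Symmetrical interval: estimate +/- z * SE.

    Assumption: z is the two-sided standard-normal critical value,
    matching the multipliers in the table (not a t-based multiplier).
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return estimate - z * se, estimate + z * se


# Hypothetical measurements (minutes), for illustration only
data = [34.0, 39.5, 36.0, 41.0, 35.5]
m, s = mean(data), sem(data)

for level in (0.68, 0.95, 0.99):
    lo, hi = symmetric_ci(m, s, level)
    print(f"{level:.0%}: {lo:.2f} to {hi:.2f}")
```

Replacing `m` and `s` with a fitted parameter and its reported SE gives the corresponding symmetrical interval for that parameter.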
Interpreting the standard errors of parameters

The calculation of the standard errors depends on the sum-of-squares, the spacing of X values, the choice of equation, and the number of replicates. The only real purpose of the standard errors is as an intermediate value used to compute the confidence intervals. If you want to compare Prism's results to those of other programs, you will want to include standard errors in the output. Otherwise, we suggest that you ask Prism to report the confidence intervals only (choose on the Diagnostics tab).

'Standard error' or 'standard deviation'?

Prism reports the standard error of each parameter, but some other programs report the same values as 'standard deviations'. Both terms mean the same thing in this context.

When you look at a group of numbers, the standard deviation (SD) and standard error of the mean (SEM) are very different. The SD tells you about the scatter of the data. The SEM tells you how well you have determined the mean. The SEM, also called the standard deviation of the mean, estimates the standard deviation of the sampling distribution of the mean. If you were to repeat the experiment many times, the SEM of your first experiment is your best guess for the standard deviation of all the measured means that would result. When applied to a calculated value, the terms "standard error" and "standard deviation" really mean the same thing.

The mean deviation describes how close the data points are to the mean of the observations, or how widely they are scattered around it. To calculate it, first compute the mean of the data using the formula for the mean. Then subtract the mean from each observation individually.

There are various ways to measure how far the observations are dispersed from a measure of central tendency. Mean deviation is one of them: it measures the dispersion of the observations around the central value, or mean, of the data.

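Mean deviation is the average of the numerical (absolute) values of the differences between the observations and the mean of the data: MD = Σ|xᵢ − x̄| / n, where x̄ is the mean and n is the number of observations.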

The formula for calculating the mean of ungrouped data is:

x̄ = (x₁ + x₂ + ... + xₙ) / n = Σxᵢ / n
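The steps described here can be sketched in Python. First compute the mean, then subtract it from each observation, then average the absolute differences. The function names and sample data are illustrative only. The sketch assumes mean deviation is taken about the arithmetic mean, as in the definition given here.

```python
def arithmetic_mean(values):
    """Mean of ungrouped data: sum of observations divided by their count."""
    return sum(values) / len(values)


def mean_deviation(values):
    """Mean (absolute) deviation about the mean: average of |x - mean|."""
    m = arithmetic_mean(values)
    # Subtract the mean from each observation, take the absolute value,
    # then average those absolute differences.
    return sum(abs(x - m) for x in values) / len(values)


data = [2, 4, 6, 8, 10]  # hypothetical observations
print(arithmetic_mean(data))  # 6.0
print(mean_deviation(data))   # 2.4
```

Here the absolute differences from the mean of 6 are 4, 2, 0, 2 and 4. Their sum is 12, and 12 / 5 = 2.4.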
